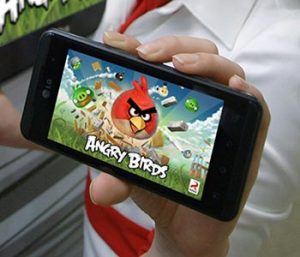

Angry Birds, the game played on smartphones everywhere, is considered suitable for kids as young as four years old—that is, until they see a banner ad showing scantily clad women, provocatively posed.

That’s just one example from Yilu Zhou’s research into the murky world of maturity ratings for applications and ads that run on mobile devices. Zhou, PhD, a professor of information systems in the Gabelli School of Business, is conducting research that could eventually help keep youngsters from seeing unseemly or shocking content when they pick up a smartphone or tablet.

“Most researchers, when they look at mobile applications, are concerned with privacy issues. They look at what information the apps are collecting—your location information, your contacts, your photos,” she said. “Not many people look at maturity ratings yet. With more than 2 million apps on iTunes and Google Play alone, you can’t simply assume that maturity ratings provided by app platforms are all accurate. So we believe this is an understudied area that we really need to look at.”

Her research is supported by a $237,042 grant from the National Science Foundation’s Secure and Trustworthy Cyberspace initiative. It’s the first Gabelli School research project funded by the NSF.

Like movies and console video games, mobile apps carry maturity ratings. But unlike movies and video games, which are rated by independent agencies, apps have no standard, official ratings guidelines, Zhou said. The criteria vary from platform to platform, and it’s usually up to the developer to assess an app’s maturity level by referring to simple, vaguely worded guidelines that leave a lot of room for interpretation.

“It’s very hard for the developers themselves to judge” what constitutes graphic violence or other sensitive content, she said. (Apple’s ratings are considered closer to the mark because the company double-checks them, although the ratings can still be questionable, she said.)

The Entertainment Software Rating Board, a nonprofit regulatory body, checks the maturity ratings of only the most popular apps, Zhou said. Meanwhile, the advertisements that accompany the apps are distributed by ad networks that may not take the user’s age into account.

Focusing on a sample of 100,000 to 200,000 apps, Zhou is trying to zero in on those most likely to be misrated for violence, sexual content, or language, and to find out why.

With help from student workers, she’ll analyze user reviews, app descriptions, developer profiles, and other information, using various techniques—text mining, web crawling, machine learning—for digesting huge amounts of online data and spotting patterns.

Social scientists and legal scholars will be invited to join the project later, she said, since the role of policies and regulations in the selection of maturity ratings will also be analyzed.

The research will be anchored in data that’s considered more of a “gold standard,” like ratings for iOS apps (since they’re reviewed by Apple) and application reviews collected through Amazon’s Mechanical Turk crowdsourcing platform, she said.

Also as part of the project, she and the students will create a simulation that demonstrates the types of advertisements that show up in applications that are rated as safe for young children.

Her work could eventually lead to models or other tools for identifying apps that are more likely to be mislabeled, or even a new online store for apps that are reliably rated as safe for kids, she said.

But for now, she said, “our major goal is to study this phenomenon [and] alert the general public” about it.